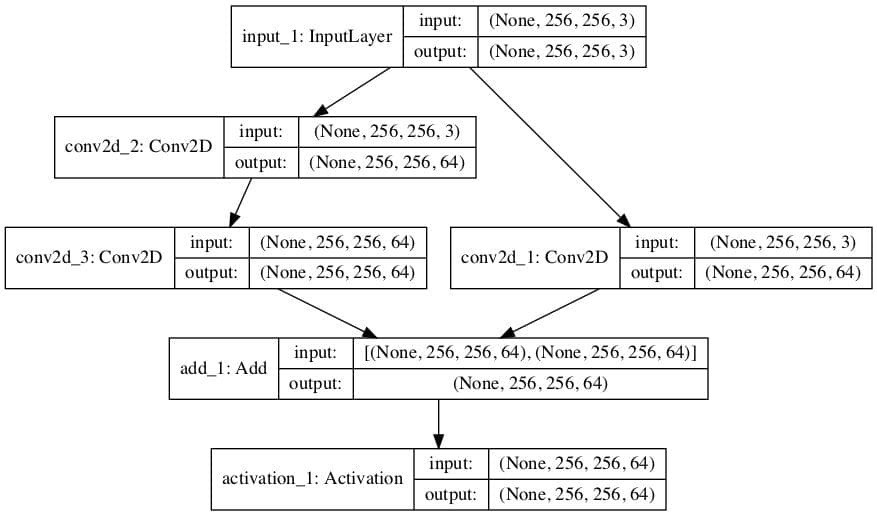

Due to this skip connection, the output of the layer is not the same now. This connection is called ’skip connection’ and is the core of residual blocks. The very first thing we notice to be different is that there is a direct connection which skips some layers(may vary in different models) in between. This problem of training very deep networks has been alleviated with the introduction of ResNet or residual networks and these Resnets are made up from Residual Blocks. You might be thinking that it could be a result of overfitting too, but here the error% of the 56-layer network is worst on both training as well as testing data which does not happen when the model is overfitting. This could be blamed on the optimization function, initialization of the network and more importantly vanishing gradient problem. This suggests that with adding more layers on top of a network, its performance degrades. We can see that error% for 56-layer is more than a 20-layer network in both cases of training data as well as testing data. Here is a plot that describes error% on training and testing data for a 20 layer Network and 56 layers Network. But it has been found that there is a maximum threshold for depth with the traditional Convolutional neural network model. For example, in case of recognising images, the first layer may learn to detect edges, the second layer may learn to identify textures and similarly the third layer can learn to detect objects and so on. The intuition behind adding more layers is that these layers progressively learn more complex features. Mostly in order to solve a complex problem, we stack some additional layers in the Deep Neural Networks which results in improved accuracy and performance. Efficiently trained networks with 100 layers and 1000 layers also.

They observed relative improvements of 28% Replacing VGG-16 layers in Faster R-CNN with ResNet-101.Won the 1st place in ILSVRC and COCO 2015 competition in ImageNet Detection, ImageNet localization, Coco detection and Coco segmentation.Won 1st place in the ILSVRC 2015 classification competition with a top-5 error rate of 3.57% (An ensemble model).

ResNet, short for Residual Network is a specific type of neural network that was introduced in 2015 by Kaiming He, Xiangyu Zhang, Shaoqing Ren and Jian Sun in their paper “Deep Residual Learning for Image Recognition”.The ResNet models were extremely successful which you can guess from the following: In this article, we shall know more about ResNet and its architecture. Here ResNet comes into rescue and helps solve this problem. But, it has been seen that as we go adding on more layers to the neural network, it becomes difficult to train them and the accuracy starts saturating and then degrades also. So, over the years, researchers tend to make deeper neural networks(adding more layers) to solve such complex tasks and to also improve the classification/recognition accuracy.

#Residual neural network series

Over the last few years, there have been a series of breakthroughs in the field of Computer Vision.Especially with the introduction of deep Convolutional neural networks, we are getting state of the art results on problems such as image classification and image recognition.
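To make the skip connection concrete, here is a minimal sketch of a residual block in plain Python. It assumes fully connected layers instead of the convolution and batch-normalization layers used in real ResNets. The helper names `matvec`, `relu`, `plain_block` and `residual_block` are hypothetical and exist only for this illustration. Both blocks apply the same two layers. The residual one adds the input x back before the final ReLU, so it computes relu(F(x) + x).

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply matrix w (rows x cols) by vector x (length cols)."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in w]


def relu(x: Vector) -> Vector:
    """Element-wise ReLU activation."""
    return [max(0.0, v) for v in x]


def plain_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """Two stacked layers without a shortcut: relu(W2 . relu(W1 . x))."""
    return relu(matvec(w2, relu(matvec(w1, x))))


def residual_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """The same two layers plus a skip connection: relu(F(x) + x),
    where F(x) = W2 . relu(W1 . x) is the residual the layers learn.
    Hypothetical fully connected stand-in for a convolutional ResNet block."""
    f_x = matvec(w2, relu(matvec(w1, x)))
    # Skip connection: add the unchanged input to the layers' output.
    return relu([f + xi for f, xi in zip(f_x, x)])


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0]
    zeros = [[0.0] * 3 for _ in range(3)]
    print(plain_block(x, zeros, zeros))     # [0.0, 0.0, 0.0]
    print(residual_block(x, zeros, zeros))  # [1.0, 2.0, 3.0]
```

With all weights set to zero, the plain block outputs zeros, while the residual block passes its non-negative input through unchanged. This is one way to see why extra residual blocks need not hurt a deep network: a block can fall back to (close to) the identity simply by driving F(x) toward zero.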
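A short chain-rule derivation suggests why the skip connection helps gradients flow backwards through a residual block. It assumes nothing (such as a final ReLU) is applied after the addition, so the block computes y = F(x) + x. Here, L is a hypothetical scalar training loss, ∂L/∂y is treated as a row vector and I is the identity matrix:

$$
\frac{\partial \mathcal{L}}{\partial \mathbf{x}}
= \frac{\partial \mathcal{L}}{\partial \mathbf{y}} \, \frac{\partial \mathbf{y}}{\partial \mathbf{x}}
= \frac{\partial \mathcal{L}}{\partial \mathbf{y}} \left( \frac{\partial F}{\partial \mathbf{x}} + I \right)
= \frac{\partial \mathcal{L}}{\partial \mathbf{y}} \, \frac{\partial F}{\partial \mathbf{x}} + \frac{\partial \mathcal{L}}{\partial \mathbf{y}}
$$

Under this assumption, the last term carries the upstream gradient back to x unchanged. Even if ∂F/∂x becomes very small, the gradient reaching earlier layers therefore does not shrink to zero through this block.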
